Why are Large Language Models general learners?

a quick dose of intuition

This post assumes you’re familiar with Large Language Models as next-token predictors. If you’re not, read this first:

Because if you think about it, what does it mean to predict the next token well enough? It's actually a much deeper question than it seems. Predicting the next token well means that you understand the underlying reality that led to the creation of that token. - Ilya Sutskever, OpenAI Chief Scientist

Ten years ago, if you told me you could train a model to do next-token prediction and then have that model pass the MCAT, the LSAT, and write code, I would have told you that was unbelievable. And I would have meant that in the literal sense. I wouldn’t have believed you.

Instead, I would have asked, “How can predicting the next token in a sentence have anything to do with developing abilities in law or medicine?”

Usually, when you train a machine learning model on a task, it learns only that task. If you train a model to predict fraud, it only predicts fraud; if you train a model to sort photos of dogs by breed, it only categorizes breeds. Why are large language models and next-token prediction different?

That’s the question we’ll address in this post.

Necessity is the mother of invention

Predicting the next word1, it turns out, requires some understanding of reality. This principle holds whether you’re solving simple arithmetic problems or deciphering a three-line poem.2

Consider the simple “next word” prediction problem below:

345 + 678 = _

Try predicting the missing word (number), but with one caveat: don’t use any rules of arithmetic. Hard, right? It isn't even clear where to start.

But if we allow ourselves to use the rules of addition, prediction becomes simple. As in elementary school, we apply the fundamental laws of arithmetic. We add each column from right to left; whenever a column sums to more than nine, we write down the ones digit and carry the tens digit into the next column. The seemingly complex problem folds neatly into an answer: 345 + 678 = 1023.
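To make the column-by-column procedure concrete, here is a minimal Python sketch of grade-school addition. The helper `column_add` is hypothetical, written only for illustration; it assumes non-negative integers passed as digit strings, and it is not a claim about how an LLM performs arithmetic internally.

```python
def column_add(a: str, b: str) -> str:
    """Add two non-negative integers given as digit strings, column by column."""
    # Pad the shorter number with leading zeros so the columns line up.
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)
    digits = []
    carry = 0
    # Work from the rightmost column to the leftmost.
    for da, db in zip(reversed(a), reversed(b)):
        column_sum = int(da) + int(db) + carry
        digits.append(str(column_sum % 10))  # ones digit is written in this column
        carry = column_sum // 10             # tens digit is carried to the next column
    # A carry left over after the last column becomes a new leading digit.
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))


print(column_add("345", "678"))  # prints 1023
```

Tracing it by hand matches the schoolbook steps: 5 + 8 = 13 (write 3, carry 1), 4 + 7 + 1 = 12 (write 2, carry 1), 3 + 6 + 1 = 10 (write 0, carry 1), and the final carry becomes the leading 1.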

Now let’s switch gears to a different prediction problem.

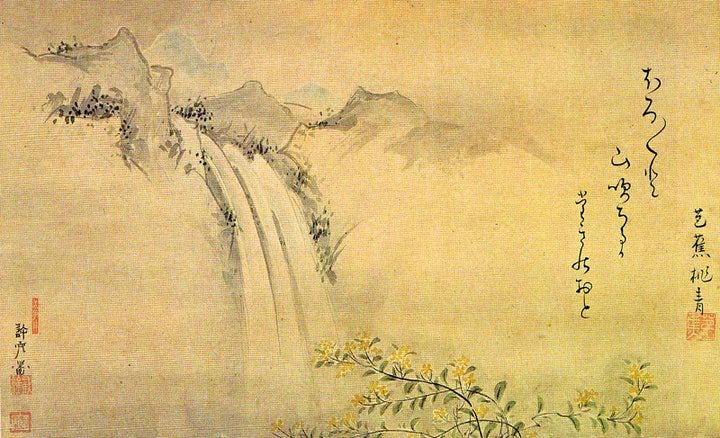

Try predicting the missing word in this three-line poem by Bashō:

Quietly, quietly,

yellow mountain roses fall –

sound of the _

What's the missing word? Without any specific context, it’s probably as perplexing as predicting the “word” in the addition problem without arithmetic.

Luckily, here too, there are rules. Our poem is a haiku. Haiku follow a 5-7-5 syllable pattern and often dance around themes of nature or fleeting moments in time.3 If the model (and you) can recognize the poem as a haiku and understand its underlying rules, predicting the next word becomes much more straightforward, and the model does a much better job at its objective.

Using these rules, we can make a much better guess. The phrase “sound of the” already uses three syllables, so the missing word needs two to complete the haiku's five-syllable closing line. It also needs to fit the haiku's theme. With these two constraints, our chances of landing on the missing word, “rapids,” soar.
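The syllable constraint can be sketched the same way. This minimal Python example uses a small, hand-labeled syllable table and a hypothetical list of candidate words; both are assumptions for illustration, since counting syllables reliably would need a pronunciation dictionary rather than spelling. It subtracts the syllables already on the closing line from five and keeps only the candidates that fill the gap exactly.

```python
# Hand-assigned syllable counts (an illustrative assumption, not a real lexicon).
SYLLABLES = {
    "sound": 1, "of": 1, "the": 1,
    "rapids": 2, "river": 2, "water": 2,
    "stream": 1, "rain": 1, "waterfall": 3,
}

CLOSING_LINE_SYLLABLES = 5  # the 5-7-5 pattern ends on a five-syllable line


def syllables_needed(prefix: list[str]) -> int:
    """How many syllables the missing word must supply to finish the line."""
    return CLOSING_LINE_SYLLABLES - sum(SYLLABLES[w] for w in prefix)


def fitting_candidates(prefix: list[str], candidates: list[str]) -> list[str]:
    """Keep only candidates whose syllable count completes the line exactly."""
    need = syllables_needed(prefix)
    return [w for w in candidates if SYLLABLES[w] == need]


prefix = ["sound", "of", "the"]
# Hypothetical candidate words, chosen only to illustrate the filter.
candidates = ["rapids", "river", "water", "stream", "rain", "waterfall"]
print(syllables_needed(prefix))                # prints 2
print(fitting_candidates(prefix, candidates))  # prints ['rapids', 'river', 'water']
```

The syllable rule alone still leaves several two-syllable words standing; it is the thematic constraint that has to narrow the field further toward “rapids.”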

These examples might feel overly simplistic. Yet, they demonstrate a crucial point: a deeper understanding of reality boosts performance on next-token prediction tasks. This is how a large language model expands its knowledge into complex domains like medicine or law. It’s all in service of predicting the next token.

With this intuition, it may be slightly less surprising that when fed with a deluge of medical texts and given massive amounts of compute, an LLM eventually learns enough to pass the US medical licensing exam.

Necessity is the mother of invention—even for AI.

1. Technically “token,” but I’ll speak loosely from here on out.

2. A note for the more technical readers: I’ve provided examples where understanding the underlying phenomena helps humans predict the next word. I don’t mean to suggest that LLMs do precisely what we do, only that understanding the underlying phenomenon is generally helpful for prediction. In fact, LLMs will have a tough time with my haiku example, because tokenization interferes with an LLM’s ability to count syllables.

3. You may have noticed that the poem above breaks this rule in English, since “quietly, quietly” is six syllables. In the original Japanese, it’s five.
