"If AI is the Wild West, does that make data the new gold?" - Matthew McConaughey [1]

Providing data for AI is big business. As AI usage continues to explode, the value of high-quality training data has skyrocketed.

In March, Google and Reddit [2] made headlines with a $60 million data licensing deal that gave Google rights to train AI models on Reddit’s vast trove of user-generated content. [3] The deal marks a significant shift in Reddit's monetization strategy: data licensing revenue grew from less than 2% of total revenue in 2022 to 10% by Q2 2024, a staggering 690% year-over-year increase. [4]

Reddit is not alone in capitalizing on this trend. Other tech companies are following suit: Stack Overflow, a popular programming Q&A site, allows OpenAI to train its GPT models on Stack Overflow content, while Shutterstock, a major stock image provider, has partnered with OpenAI to supply images for training DALL-E, OpenAI’s image generation model.

And the data gold rush isn't confined to Silicon Valley. OpenAI now licenses data from prestigious publications like the Wall Street Journal, Time magazine, The Atlantic, and Vox, while Meta has partnered with Universal Music Group [5] for song data and has considered acquiring the publishing house Simon & Schuster for access to its extensive literary catalogue, which includes books like The Great Gatsby and Catch-22. [6]

What incentives do Google, OpenAI, and Meta have to pay for this data? That’s the question we’ll answer in this post.

Why models are hungry for data

Imagine a student who, for a year, is told to self-teach in a library. The library is sparse: it contains a single high school physics textbook and nothing else. That student would be unable to learn chemistry or history. They also wouldn't be able to teach themselves quantum physics. The same is true for LLMs and their training data sets. LLMs need data for both breadth of knowledge and domain-specific mastery.

LLMs learn by predicting the next word in a sequence. But like our student in the limited library, they can only learn from the data they're exposed to. If a model is solely trained on Harry Potter, it won't be able to write like George Saunders, answer questions about quantum computing, or discuss the origins of trap music. When asked a question, though, that model would respond in J.K. Rowling's writing style, because LLMs mimic their training sets. [7]
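To make the "limited library" point concrete, here is a minimal sketch of next-word prediction using a toy bigram model. This is far simpler than a real LLM, and the corpus string and the function names `train_bigram` and `generate` are made up for illustration. It only shows that a model can produce nothing it has not seen in training.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record, for each word, every word that follows it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length=8, seed=0):
    """Repeatedly sample a next word from those seen after the current word."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        options = followers.get(out[-1])
        if not options:  # word never seen with a follower: nothing to predict
            break
        out.append(rng.choice(options))
    return " ".join(out)

# Hypothetical toy "library": one made-up sentence.
corpus = "the boy waved his wand and the owl flew to the castle"
model = train_bigram(corpus)

print(generate(model, "the"))      # every word comes from the corpus
print(generate(model, "quantum"))  # stops immediately: "quantum" was never seen
```

Every word the toy model emits comes from its one-sentence corpus, and a prompt outside that corpus gets no continuation at all. Real LLMs generalize far better than this, but the dependence on training data is the same in kind.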

To expand an LLM's capabilities, we need to diversify its "library." For instance, for the model to write like George Saunders, it’d need to be trained on texts written by Saunders like Lincoln in the Bardo and Tenth of December. Similarly, to discuss quantum physics, we'd need to include advanced physics texts in the training data, and the more advanced texts we included in the model’s training set, the better it would perform.

Put another way: an LLM’s capabilities directly reflect the quality, diversity, and sheer size of its training data.

For more on how the volume of training data impacts model performance see:

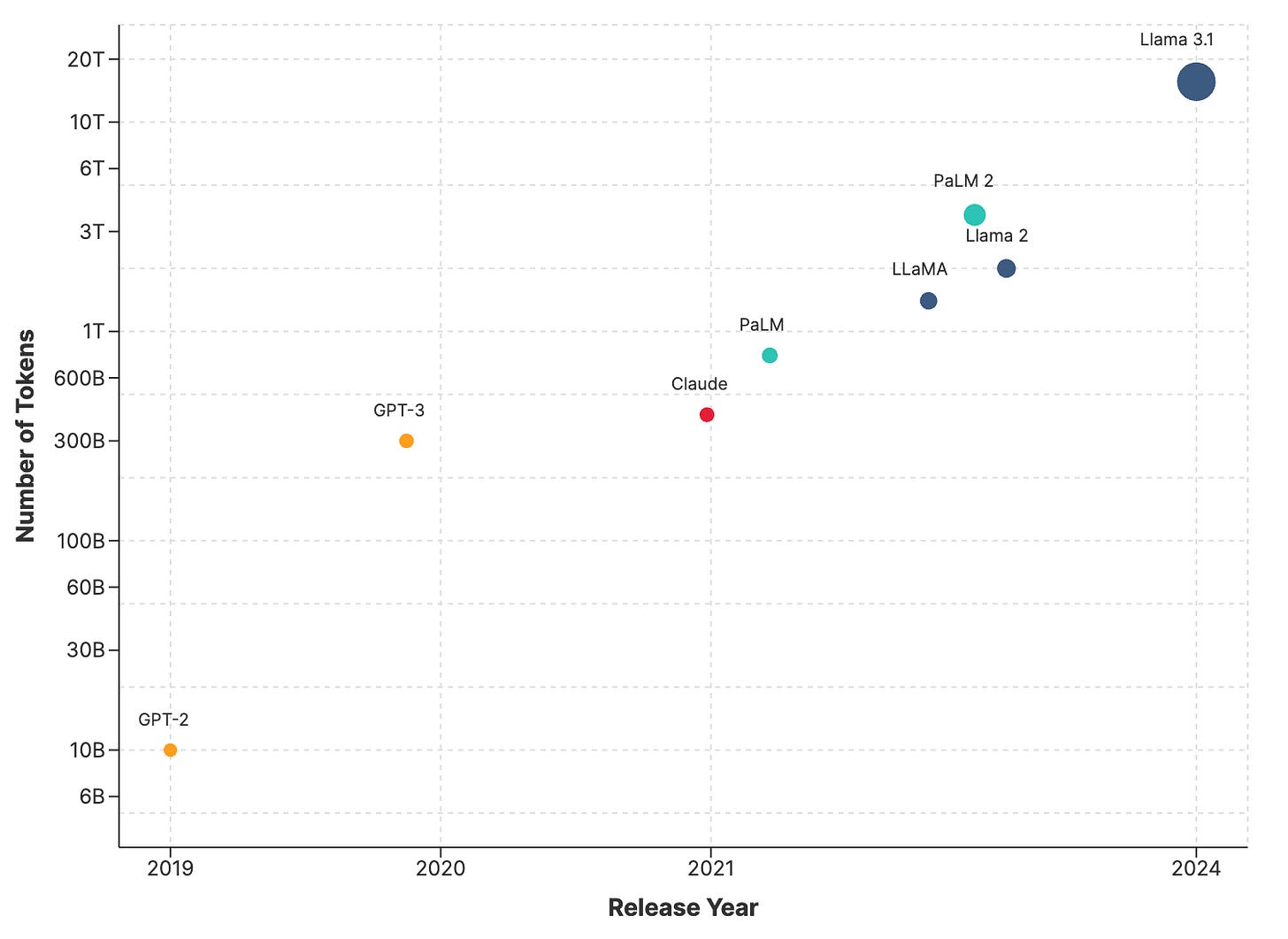

That data volume matters becomes apparent when you plot the number of training tokens (think of a token as roughly 3/4 of a word) against model release date: newer models are trained on more tokens, and they are more capable. Take the MMLU, a benchmark of 15,908 questions covering 57 subjects across STEM, the humanities, and the social sciences. Llama-65B, trained on 1.4 trillion tokens, “failed” the MMLU with a score of 63.4, while Llama-3.1-70B, trained on 15 trillion tokens, scored 86.
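The arithmetic behind that comparison is quick to check. The sketch below uses only the figures quoted above plus the 3/4-word rule of thumb; the variable and function names are illustrative. Note that the two models also differ in size and architecture, so this is not a controlled comparison.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb: one token is about 3/4 of a word

def tokens_to_words(tokens):
    """Convert a token count to an approximate word count."""
    return tokens * WORDS_PER_TOKEN

llama_65b_tokens = 1.4e12     # 1.4 trillion training tokens
llama_31_70b_tokens = 15e12   # 15 trillion training tokens

ratio = llama_31_70b_tokens / llama_65b_tokens
words_trillions = tokens_to_words(llama_31_70b_tokens) / 1e12
mmlu_gain = 86.0 - 63.4

print(f"{ratio:.1f}x more training tokens")        # 10.7x
print(f"about {words_trillions:.2f} trillion words")  # about 11.25 trillion words
print(f"{mmlu_gain:.1f} more MMLU points")         # 22.6
```

Roughly ten times the training tokens accompanied a gain of about 22.6 MMLU points between these two models.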

Companies have caught on and are training each new model on more and more tokens.

Tokenomics

And some companies even believe that more data is the sole differentiator. Nick Grudin, Meta’s vice president of global partnership and content, has said internally that “The only thing that’s holding us back from being as good as ChatGPT is literally just data volume.”

Closing this performance gap is why companies are willing to pay for more data. But quantity alone isn't enough; quality is paramount.

You can see why quality might matter if you consider the following sentences:

"The big red ball is over there on the green grass near the old oak tree."

"Timothy James Walz (born April 6, 1964) is Minnesota's 41st governor, ex-teacher, and Army veteran."

While these two sentences have roughly the same number of tokens, the second is information-rich — it gives us specific information about a specific individual — while the first is information-poor — it’s a generic sentence. In other words, the tokens from the second sentence are of higher quality.

And higher quality tokens make for better models. Recent models filter data for quality before training. For example, the Llama 3 models were trained on 15 trillion tokens only after extensive data filtering. [8]
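A quality filter can be sketched as "score every document, keep the ones above a threshold." In the sketch below the scorer is a crude hand-written heuristic (the share of words that contain a digit or are capitalized mid-sentence), standing in for a learned classifier. The heuristic, the threshold, and the names `info_density` and `filter_corpus` are all assumptions for illustration, not how any production pipeline works.

```python
import re

def info_density(sentence):
    """Crude stand-in for a learned quality classifier (an assumption):
    share of words that contain a digit or are capitalized after the first word."""
    words = re.findall(r"[A-Za-z0-9']+", sentence)
    if not words:
        return 0.0
    specific = sum(
        1
        for i, w in enumerate(words)
        if any(c.isdigit() for c in w) or (i > 0 and w[0].isupper())
    )
    return specific / len(words)

def filter_corpus(docs, threshold=0.3):
    """Keep only documents whose score meets the (arbitrary) threshold."""
    return [d for d in docs if info_density(d) >= threshold]

docs = [
    "The big red ball is over there on the green grass near the old oak tree.",
    "Timothy James Walz (born April 6, 1964) is Minnesota's 41st governor, "
    "ex-teacher, and Army veteran.",
]

for d in docs:
    print(f"{info_density(d):.2f}  {d[:40]}...")
print(len(filter_corpus(docs)), "of", len(docs), "documents kept")
```

On the two example sentences, the generic one scores 0.00 and the biographical one scores 0.50, so only the second survives the filter. A real pipeline would replace the heuristic with a trained model, but the score-then-threshold shape stays the same.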

However, quality alone is not enough; the subject matter also counts. For example, if you want your model to imitate a senior software engineer, you need sufficient code tokens from senior software engineers.

These tokens are scarcer than you might think. Entire companies have emerged to source them directly from human labelers. Y Combinator-backed DataCurve’s sole focus is to provide “expert quality code data at scale from highly skilled software engineers,” while Scale AI, recently valued at a mammoth $13.8 billion, provides human data labeling for major corporations from Microsoft to Meta to Nvidia.

This is why Reddit can charge Google $60 million. Reddit’s diverse subreddits, from r/computerscience to r/SkincareAddiction, are filled with expert advice that is ideal for training LLMs, and demand for that kind of data has outstripped supply.

[1] From the psychedelic Salesforce commercial Gold Rush.

[2] Disclosure: I am a former Reddit employee.

[3] On an annual basis.

[5] Exactly what data is unclear; the terms were not disclosed.

[6] This would not have been a small purchase. In 2023, Simon & Schuster sold to private equity firm KKR for $1.62 billion.

[7] Dataset choice matters. How much? Some, like OpenAI engineer James Betker, take the view that choices like the model architecture are insignificant compared to picking the dataset.

[8] Meta used Llama-2 to classify web documents as either high or low quality as part of the pre-filtering step. You can imagine that Llama-4 will use Llama-3 as the quality classifier.

Where do you think the next frontiers for generating useful data are?
