Note: This post assumes you’re familiar with FLOPs. If you aren’t, start with this explainer first.

Anthropic intends to train its new model, Claude-Next, using ~100x the compute of GPT-3. That’s roughly 10²⁵ FLOPs or, if you prefer dollars, somewhere between $10 million and $150 million per training run.
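Here is a back-of-envelope sketch of how a FLOP count becomes a dollar figure. It assumes GPT-3's training compute of roughly 3.14 × 10²³ FLOPs (the figure from the GPT-3 paper) and A100s at their 312 TFLOPS peak. Two inputs are pure assumptions for illustration: the fraction of peak actually achieved (`utilization`) and the rental price per GPU-hour (`usd_per_gpu_hour`).

```python
# Back-of-envelope: training FLOPs -> GPU-hours -> dollars.
# Assumptions (illustrative only, not Anthropic's numbers):
#   - GPT-3 used ~3.14e23 training FLOPs
#   - A100 peak is 312e12 FLOP/s (dense BF16)
#   - utilization and price per GPU-hour are guesses you should vary

GPT3_FLOPS = 3.14e23
A100_PEAK_FLOPS_PER_S = 312e12


def gpu_hours_for(flops: float, utilization: float) -> float:
    """GPU-hours needed to perform `flops` at a given fraction of A100 peak."""
    seconds = flops / (A100_PEAK_FLOPS_PER_S * utilization)
    return seconds / 3600


def training_cost_usd(flops: float, utilization: float, usd_per_gpu_hour: float) -> float:
    """Dollar cost of a training run under the assumptions above."""
    return gpu_hours_for(flops, utilization) * usd_per_gpu_hour


claude_next_flops = 100 * GPT3_FLOPS  # ~3e25, i.e. on the order of 1e25
for price in (1.0, 2.0):  # hypothetical $/A100-hour
    cost = training_cost_usd(claude_next_flops, utilization=0.4, usd_per_gpu_hour=price)
    print(f"${price:.2f}/GPU-hour -> ${cost / 1e6:,.0f} million")
```

At 40% utilization this prints about $70 million and $140 million; change either assumption and the estimate moves proportionally.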

That’s a massive gamble on an experiment that may not pan out. Why is Anthropic confident enough to run it? One plausible explanation is that they have a forecast. Their leaked pitch deck says, “We believe that companies that train the best 2025/26 models will be too far ahead for anyone to catch up in subsequent cycles.”

That sounds confident. What’s underpinning that confidence? How might Anthropic be making these forecasts?

Forecasting performance

By building models to predict their models.

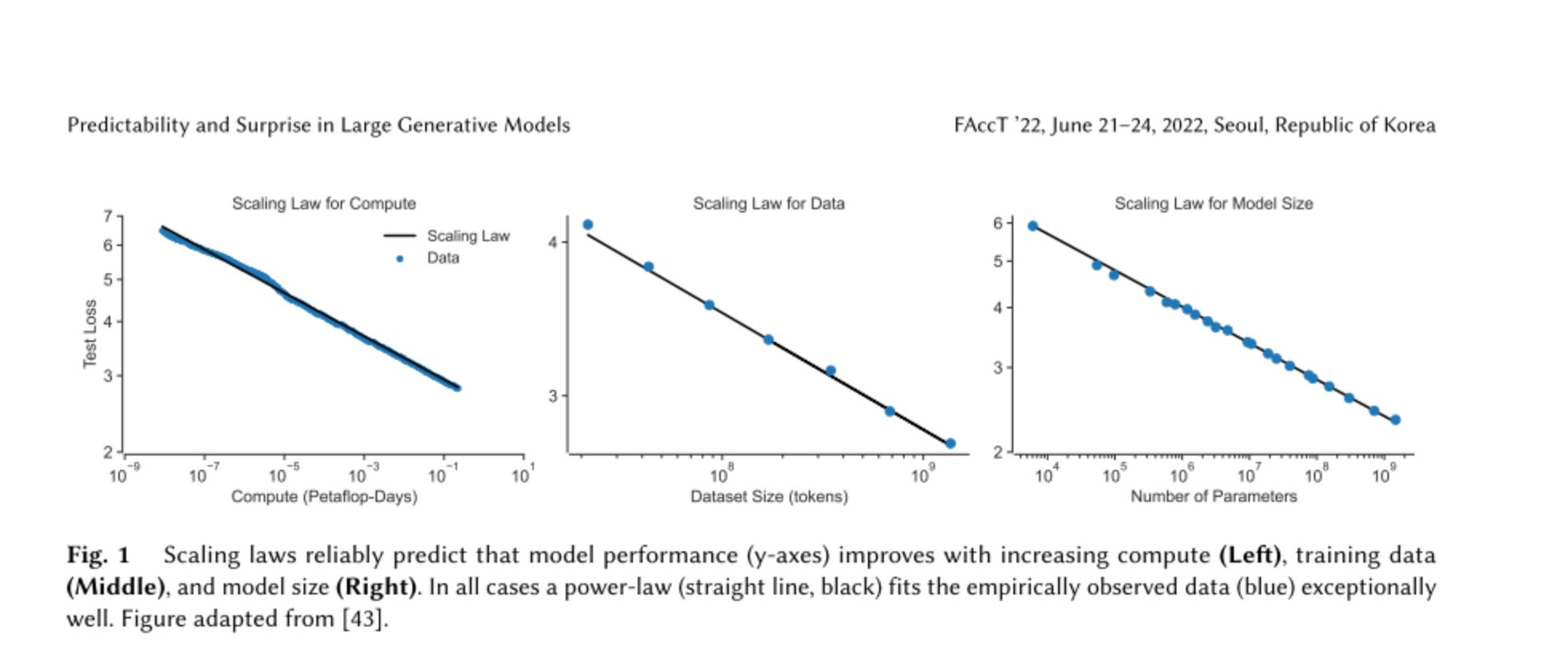

It turns out that error on next-token prediction drops smoothly, following a power law, as we scale compute, dataset size, and parameter count in unison.¹ These curves are not niche crank science like attempts to square the circle, nor are they results awaiting replication like room-temperature, ambient-pressure superconductors.

No. Instead, we’ve seen multiple papers replicate smooth scaling laws for Large Language Models; just look at Kaplan et al. [1], Henighan et al. [2], and Hoffmann et al. [3].
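To make "drops smoothly" concrete, here is the parametric form Hoffmann et al. [3] fit to their training runs: L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D is the number of training tokens. The constants are the values reported in that paper for their fit; treat them as specific to their data and setup rather than universal. The model sizes and the ~20 tokens-per-parameter ratio in the loop are illustrative assumptions.

```python
# Chinchilla-style parametric loss: L(N, D) = E + A / N**alpha + B / D**beta
# Constants are the fitted values reported by Hoffmann et al. (2022);
# they describe *their* training setup, not every LLM.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def chinchilla_loss(n_params: float, n_tokens: float) -> float:
    """Predicted training loss for a model of n_params trained on n_tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


# Scale parameters and tokens together (~20 tokens per parameter, an assumption)
# and watch the predicted loss fall smoothly toward the irreducible term E.
for n in (1e9, 1e10, 1e11, 1e12):
    print(f"N={n:.0e}, D={20 * n:.0e}: loss ~ {chinchilla_loss(n, 20 * n):.3f}")
```

Each 10x step buys a smaller absolute improvement, but the improvement is predictable, which is what makes forecasting possible.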

If these laws continue to hold, LLMs will keep improving through engineering, not research. Bigger models, more data, more compute: that’s the recipe.² Research breakthroughs would only accelerate that progress further.

How were these laws discovered?

You're probably wondering how researchers uncovered these scaling laws in the first place. Through empirical work, not pure theory. Researchers trained LLMs with varying amounts of compute, data, and parameters while measuring model performance. Then they fit curves to the data.

The laws aren’t only a forecast for future model improvement. OpenAI leveraged a version of them to “reliably predict some aspects of the performance of GPT-4 from smaller models trained using 1,000× – 10,000× less compute.”³ These predictions were then used to tune GPT-4 instead of running multiple prohibitively expensive training jobs.⁴
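The "fit curves, then extrapolate" workflow can be sketched in a few lines. A pure power law L(C) = a·C^(−b) is a straight line in log-log space, so ordinary least squares on (log C, log L) recovers a and b. The data below is synthetic, generated from a made-up law with a little fixed noise; real fits, including the one in OpenAI's GPT-4 report, typically add an irreducible-loss term, which calls for a nonlinear fit instead.

```python
import math


def fit_power_law(compute: list[float], loss: list[float]) -> tuple[float, float]:
    """Fit loss = a * compute**(-b) by least squares on log-log data; returns (a, b)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -slope


def predict(a: float, b: float, compute: float) -> float:
    """Evaluate the fitted power law at a given compute budget."""
    return a * compute ** (-b)


# Synthetic "small model" runs from a hypothetical law loss = 20 * C**-0.05,
# perturbed by small, fixed multiplicative noise.
true_a, true_b = 20.0, 0.05
small_compute = [1e18, 3e18, 1e19, 3e19, 1e20]
noise = [1.01, 0.99, 1.005, 0.995, 1.0]
small_loss = [predict(true_a, true_b, c) * e for c, e in zip(small_compute, noise)]

a, b = fit_power_law(small_compute, small_loss)
target = 1000 * small_compute[-1]  # extrapolate 1,000x past the largest run
print(f"fitted b = {b:.3f}; predicted loss at {target:.0e} FLOPs = {predict(a, b, target):.3f}")
print(f"true loss there = {predict(true_a, true_b, target):.3f}")
```

Even with noise in the small runs, the extrapolated loss lands within a few percent of the true value, but only because the synthetic data really does follow a power law.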

Next-token prediction error isn’t our target

However, as interesting as these scaling laws are, we don’t care about next-token prediction. We care about the model’s abilities on specific, useful tasks like code, drug design, and law.

Instead of measuring next-token prediction, we want to know how scale impacts a model’s “general intelligence,” and one of the best measurements of that is the MMLU (which you can read more about here).

“MMLU” stands for “Massive Multitask Language Understanding” and is a model benchmark containing 15,908 questions covering 57 subjects across STEM, the humanities, and the social sciences, with difficulty ranging from “Elementary” to “High School” to “College” to “Professional.” It’s the test Harvard would require models to take for admission.

So the question we really care about answering is, “Does improvement on next-token prediction correspond to increased performance on the MMLU?”

Llama-2 is a compass

Normally, this would be a difficult question to answer, since OpenAI and Anthropic do not publish next-token prediction performance for GPT-4 or Claude-2.

But there’s another option. In July, Meta open-sourced their flagship LLM, Llama-2. Llama-2 comes in different sizes: 7 billion, 13 billion, 34 billion, and 70 billion parameters.

And it comes with details. Specifically, the next-token prediction error and the MMLU scores. Since we have both, we can check the correlation.

Here they are, plotted against each other, with Claude-2 and GPT-4 for reference.

There’s definitely a correlation here. I’m hesitant to say exactly what form it takes with only four data points, but it’s clear that improving the training loss improves the MMLU score, and substantially so.

In other words, the above scaling laws aren’t merely hobbyist exploration. They have real forecasting power. This shouldn’t surprise us, given the power of learning to predict the next token.

Extrapolation

So, we have the MMLU as a function of the training loss. Great. We also happen to know how much compute Meta used to train the Llama-2 models, so we can also look at MMLU scores as a function of compute.

Meta used A100-80GB GPUs for training Llama-2. Knowing this, we can straightforwardly convert the GPU-hours Meta reports into FLOPs,⁵ and plot them relative to the 10²⁵ FLOPs of Anthropic’s next model, aptly named “Claude-Next.”
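The conversion is GPU-hours × 3,600 seconds × the A100's 312 TFLOPS peak, optionally scaled by a utilization factor. The sketch below uses the 1,720,320 GPU-hours the Llama-2 paper reports for the 70B model and assumes full utilization, as the plot does; the helper name `gpu_hours_to_flops` is illustrative.

```python
A100_PEAK_FLOPS_PER_S = 312e12  # A100 dense BF16 peak


def gpu_hours_to_flops(gpu_hours: float, utilization: float = 1.0) -> float:
    """Total FLOPs for `gpu_hours` of A100 time at the given fraction of peak."""
    return gpu_hours * 3600 * A100_PEAK_FLOPS_PER_S * utilization


llama2_70b_flops = gpu_hours_to_flops(1_720_320)  # GPU-hours reported for Llama-2-70B
claude_next_flops = 1e25
print(f"Llama-2-70B: {llama2_70b_flops:.2e} FLOPs")  # ~1.93e24
print(f"Claude-Next / Llama-2-70B: {claude_next_flops / llama2_70b_flops:.1f}x")
```

Under this full-utilization reading, Claude-Next's budget is roughly five times Llama-2-70B's.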

I’ve drawn Claude-Next as a vertical line in the above graph since we know Claude-Next’s FLOPs but not its MMLU score, which I’ll leave to you to guess. While I have high confidence that Claude-Next will outperform Llama-2-70b by a significant margin, I’m wary of extrapolating beyond that claim.
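If you want to form your own guess, one deliberately crude approach is to fit a straight line of MMLU score against log₁₀(FLOPs) for the four Llama-2 models and read it off at 10²⁵. The 5-shot MMLU scores (45.3, 54.8, 62.6, 68.9) and GPU-hours are the figures in the Llama-2 paper. The straight-line form is an assumption that cannot hold indefinitely, since MMLU tops out at 100%, and with four points and a different model family, treat whatever it prints as a guess, not a forecast.

```python
import math

A100_PEAK_FLOPS_PER_S = 312e12

# Llama-2 GPU-hours and 5-shot MMLU (%) as reported by Meta.
gpu_hours = {"7B": 184_320, "13B": 368_640, "34B": 1_038_336, "70B": 1_720_320}
mmlu = {"7B": 45.3, "13B": 54.8, "34B": 62.6, "70B": 68.9}

# Full-utilization FLOPs, matching the plot's assumption.
xs = [math.log10(h * 3600 * A100_PEAK_FLOPS_PER_S) for h in gpu_hours.values()]
ys = list(mmlu.values())

# Ordinary least-squares line: MMLU = intercept + slope * log10(FLOPs).
mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
intercept = my - slope * mx


def naive_mmlu_guess(flops: float) -> float:
    """Straight-line guess capped at 100%; an illustration, not a forecast."""
    return min(100.0, intercept + slope * math.log10(flops))


print(f"slope: {slope:.1f} MMLU points per 10x compute")
print(f"naive guess at 1e25 FLOPs: {naive_mmlu_guess(1e25):.0f}%")
```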

Back to where we started

To return to our original question: why is Anthropic willing to spend hundreds of millions of dollars on compute? Because they understand these scaling laws, and they understand them because they discovered them. Kaplan and Henighan, the lead authors of two of the scaling law papers I mentioned earlier? They were two of the first eleven employees at Anthropic.

References

[1] Jared Kaplan, Sam McCandlish, Tom Henighan, Tom B. Brown, Benjamin Chess, Rewon Child, Scott Gray, Alec Radford, Jeffrey Wu, and Dario Amodei. Scaling laws for neural language models. arXiv preprint arXiv:2001.08361, 2020.

[2] Tom Henighan, Jared Kaplan, Mor Katz, Mark Chen, Christopher Hesse, Jacob Jackson, Heewoo Jun, Tom B. Brown, Prafulla Dhariwal, Scott Gray, et al. Scaling laws for autoregressive generative modeling. arXiv preprint arXiv:2010.14701, 2020.

[3] Jordan Hoffmann, Sebastian Borgeaud, Arthur Mensch, Elena Buchatskaya, Trevor Cai, Eliza Rutherford, Diego de Las Casas, Lisa Anne Hendricks, et al. Training compute-optimal large language models. arXiv preprint arXiv:2203.15556, 2022.

Appendix

If you’re interested in reading more on scaling laws, check out the following:

First-principles on AI scaling (Dynomight, 2023)

chinchilla’s wild implications (LessWrong, 2022)

Footnotes

¹ You must scale them in tandem: too little of any one ingredient becomes a bottleneck for performance. One rough heuristic for this is to set FLOPs ≈ 6ND, where N is the number of parameters and D is the number of tokens. Google used this heuristic to train PaLM-2.
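As a sketch of the heuristic: with C ≈ 6ND, fixing a token-to-parameter ratio r = D/N turns a compute budget into a model size, N = √(C / 6r). The default of ~20 tokens per parameter below is the commonly quoted rule of thumb drawn from Hoffmann et al. [3], and it is an assumption here; the function names are illustrative.

```python
import math


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training compute via the C ~= 6 * N * D heuristic."""
    return 6 * n_params * n_tokens


def compute_optimal_split(flops: float, tokens_per_param: float = 20.0) -> tuple[float, float]:
    """Split a FLOP budget into (N, D) with D = tokens_per_param * N and C = 6ND."""
    n = math.sqrt(flops / (6 * tokens_per_param))
    return n, tokens_per_param * n


print(f"{training_flops(70e9, 2e12):.1e}")  # Llama-2-70B on 2T tokens: 8.4e+23
n, d = compute_optimal_split(1e25)
print(f"1e25 FLOPs -> ~{n / 1e9:.0f}B params on ~{d / 1e12:.1f}T tokens")
```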

² Recent research also suggests that data quality changes the shape of these laws.

³ As you might remember from the compute post, compute costs for training LLMs make your eyes water.

⁴ For the curious: OpenAI may have been able to transfer hyperparameters from the smaller models to the larger ones by using methods akin to those in Yang et al.’s Tensor Programs V.

⁵ A100s peak at 312 TFLOPS (dense BF16) at 100% utilization. This plot assumes the quoted GPU-hours are, by definition, at full utilization.

However, there is another interpretation of a “GPU-hour”: that the GPU ran for an hour at less than full utilization (the Llama-2 paper doesn’t make clear which is intended). In that case, divide the FLOPs for the Llama-2 models by a factor of ~2.5, since actual GPU utilization is often much lower than peak, around 40%. Thank you to Joe Gershenson for pointing this out.
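One way to check which reading is closer is to compare the 6ND estimate of the work actually done with what the reported GPU-hours could deliver at peak. A sketch for Llama-2-70B, assuming 70 billion parameters, the 2 trillion training tokens Meta reports, and the 1,720,320 GPU-hours from the Llama-2 paper; keep in mind that 6ND is itself only an approximation.

```python
A100_PEAK_FLOPS_PER_S = 312e12

n_params = 70e9          # Llama-2-70B
n_tokens = 2e12          # 2T training tokens
gpu_hours = 1_720_320    # reported A100 GPU-hours

work_done = 6 * n_params * n_tokens                       # ~8.4e23 FLOPs (6ND estimate)
peak_capacity = gpu_hours * 3600 * A100_PEAK_FLOPS_PER_S  # ~1.93e24 FLOPs at 100%
implied_utilization = work_done / peak_capacity
print(f"implied utilization: {implied_utilization:.0%}")  # about 43%
```

An implied utilization in the low 40s is consistent with the second interpretation.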